Welcome to the K-Optional Newsletter, where we post about interesting real-world tech solutions. If you like this type of thing, consider subscribing at the bottom where it says “Get the latest in full stack engineering”. Feel free to email jack@koptional dot com with comments or to talk software architecture.

An SPA problem and a roundabout solution

You know when they say, “it’s so crazy, it just might work”? Well I don’t believe that. I say go for the airtight plan - Michael Scott.

One of our clients runs a financial marketplace single-page application (SPA), hosted on static file-serving infra. Static SPAs dynamically construct page content in the browser after load. In contrast, server-rendered applications send all or most HTML within the initial page response.

There are pros and cons to the static SPA:

- Pro: Eliminating server code execution can be a reliability advantage since file serving is tried and true. Site outages basically never happen.

- Con: A static SPA often accompanies an expansive JavaScript bundle (i.e. megabytes).

- Pro: Content-delivery networks can be more effective at optimizing static SPA delivery than, say, a server endpoint (though the gap is closing).

- Con: Social media previews (and sometimes SEO) depend on HTML metadata being present at render.

That last one proved to be a major obstacle; users of our client’s app often post marketplace listings on Twitter and Discord, and the SPA meant no attractive preview.
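For context, the metadata in question is a handful of tags in the page's head. Preview bots generally read them from the initial HTML response without running the page's JavaScript, which is why a static SPA comes up empty. Here is a minimal sketch of what a server-rendered page would emit; the `Listing` shape and the function names are hypothetical, not taken from the client's codebase.

```typescript
// Hypothetical listing shape; the real marketplace's fields are not shown in this post.
interface Listing {
  title: string;
  description: string;
  imageUrl: string;
  url: string;
}

// Escape characters that would otherwise break out of an HTML attribute value.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Build the Open Graph and Twitter Card tags that preview bots look for in <head>.
function previewMetaTags(listing: Listing): string {
  const og: Array<[string, string]> = [
    ["og:title", listing.title],
    ["og:description", listing.description],
    ["og:image", listing.imageUrl],
    ["og:url", listing.url],
  ];
  const lines = og.map(
    ([property, content]) =>
      `<meta property="${property}" content="${escapeAttr(content)}" />`
  );
  // Twitter Card tags use the `name` attribute rather than `property`.
  lines.push(`<meta name="twitter:card" content="summary_large_image" />`);
  return lines.join("\n");
}
```

In the SPA, tags like these only appear after the bundle loads and runs, which is too late for a bot that reads the first response and moves on.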

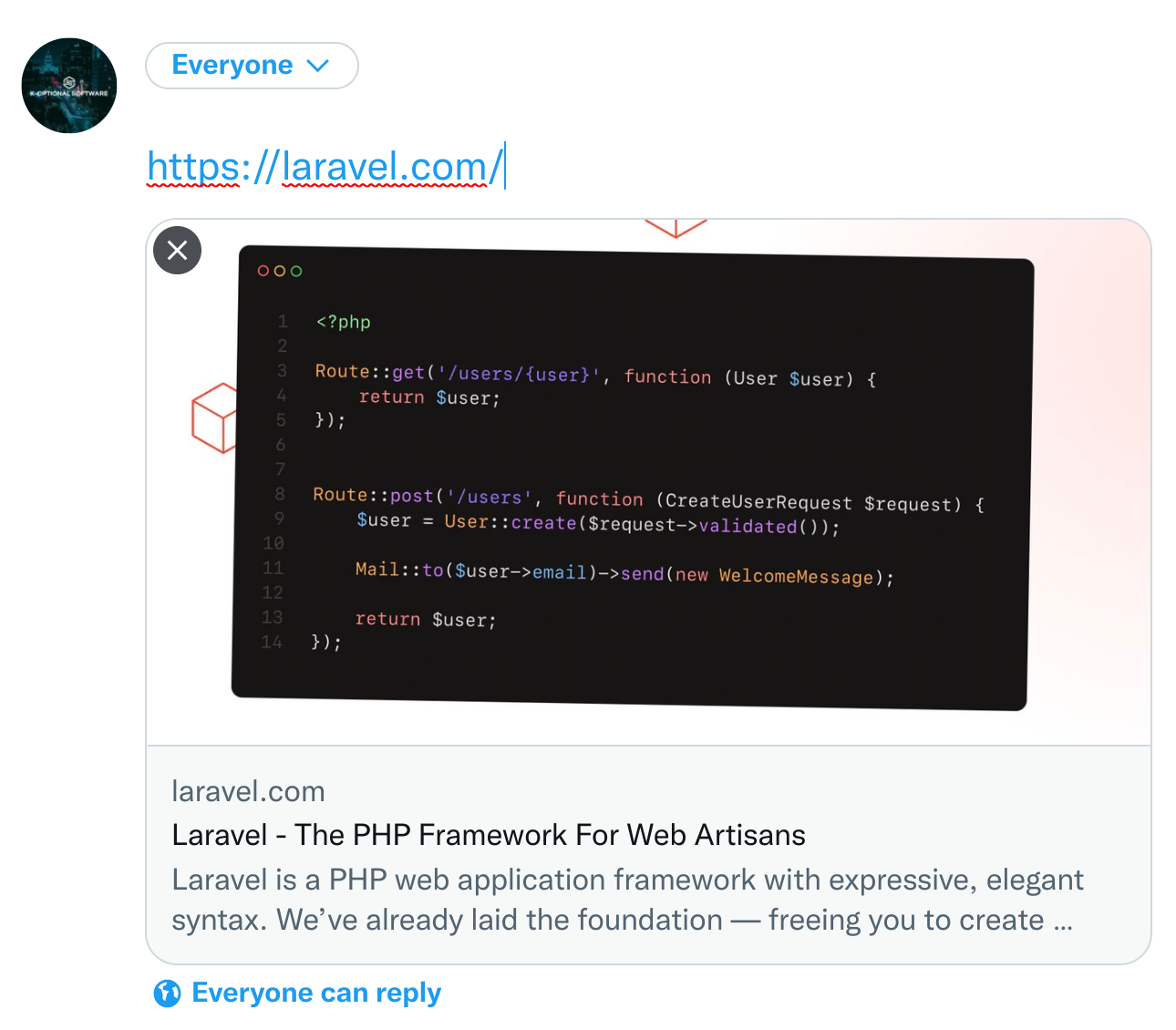

An example of a rich preview that Twitter generates.


Potential workarounds

Dedicated subdomain for social posts

We looked into setting up a separate app that lived on a social. subdomain. This app would render on the server and appear in prefilled Tweets. Such a URL would transmit the needed meta HTML, and redirect to the main site on load.

Though simpler than you might expect, using a different site felt like a big compromise. After all, we couldn’t even be sure that users wouldn’t just post the link from the address bar anyway.

Migrate to Next.js

We could also migrate this pure React (craco) application to a framework like Next.js, which enables server-side rendering without sacrificing anything in terms of reliability.

Except the rewrite would take too much time considering the size of the codebase. This also felt like a fairly disruptive play just to achieve social previews, though I’ll admit Next.js has other benefits.

Prerender HTML

Another option we explored was generating HTML ahead of time for listings that users create or update. An AWS S3 bucket can serve the HTML file corresponding to the URL a user posts, falling back to the top-level application index.html.

This option appealed to us, but for two obstacles:

- It meant provisioning a service that listened for listing changes, generated HTML, and saved it to a file server; in other words, mixing application and infrastructure code.

- The HTML would need to reference the application JavaScript and CSS, and the craco build process generates a differently named script and stylesheet on each build. So a fresh deployment could potentially invalidate all prerendered listings.

  - We modified the build process to use the same file name each time. But this level of configuration isn’t well supported in craco, and we would also lose some degree of browser cache functionality (cache busting via query parameter is possible, but limited in prerendered HTML).

The proverbial cheese cart

It turns out that social media preview bots send identifying User-Agent HTTP headers.

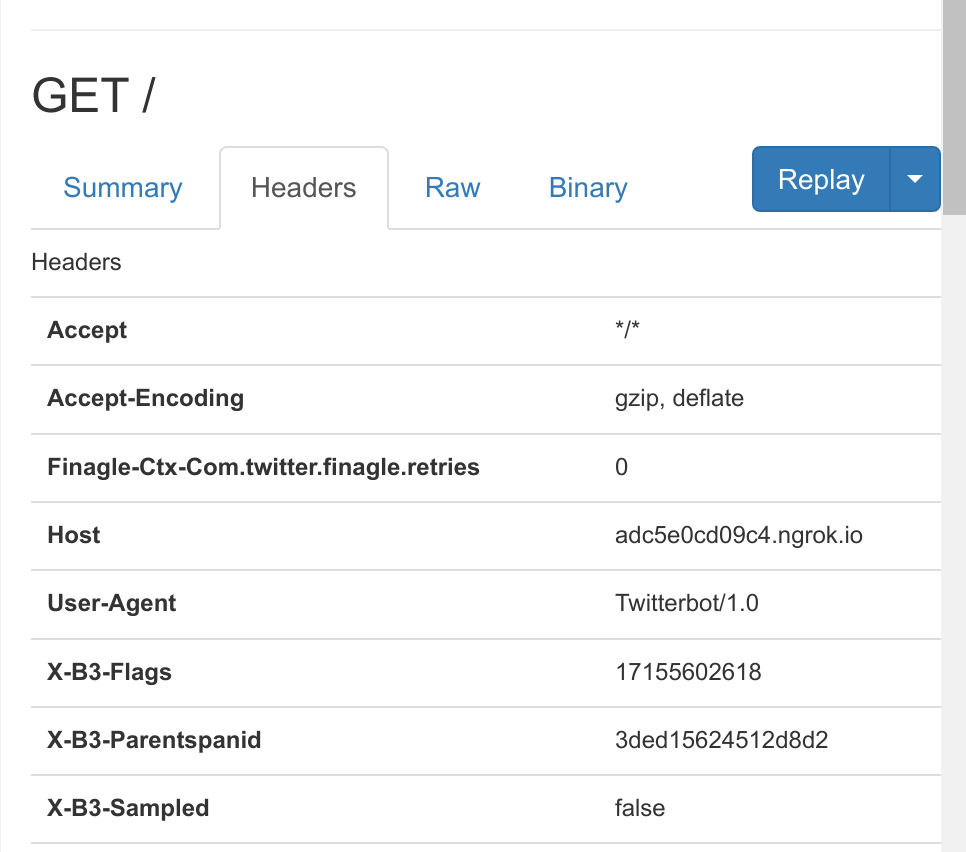

A Twitterbot request, courtesy of ngrok


Although one should never use User-Agent headers for authentication purposes (anyone may set arbitrary values), they can provide useful information. We collectively figured we’d leave the current app alone and devise a special response just for social media bots. And a Cloudflare Load Balancer at the DNS level meant we needed to make zero changes to the application code!
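In our setup the matching rule lived in Cloudflare, not in code, but the predicate itself is simple enough to sketch. The marker list below is illustrative only (Twitterbot and Discordbot because those are the platforms mentioned above, plus Facebook's crawler as a common third), and `isSocialBot` is a made-up name.

```typescript
// Lowercased substrings found in the User-Agent headers of some preview crawlers.
// Illustrative and not exhaustive; this is not the actual Cloudflare rule.
const SOCIAL_BOT_MARKERS = ["twitterbot", "discordbot", "facebookexternalhit"];

// True when the User-Agent looks like a social preview bot.
// This is a routing hint only: the header is trivially spoofable.
function isSocialBot(userAgent: string | undefined): boolean {
  if (!userAgent) return false;
  const ua = userAgent.toLowerCase();
  return SOCIAL_BOT_MARKERS.some((marker) => ua.includes(marker));
}
```

A spoofed header here just means someone receives prerendered HTML instead of the SPA shell, so the cost of a false positive is low.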

The load balancer simply routes social media bot requests to a 15-line Express.js application which *opens the actual site with Puppeteer, renders the page, and sends a response with the HTML*. We don’t even have to redefine the per-page meta HTML; we get it for free from our client-side React Helmet integration.
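A rough sketch of the rendering step might look like the following. It is not the production code: `ORIGIN`, `renderForBot`, and the two `...Like` interfaces are invented for illustration. The interfaces list only the Puppeteer methods the sketch calls (`newPage`, `goto`, `content`, `close`), so the block type-checks without the library, and a real Puppeteer `Browser` should satisfy them.

```typescript
// Structural stand-ins for the parts of Puppeteer's Page and Browser used below.
interface PageLike {
  goto(url: string, options?: { waitUntil?: "networkidle0" }): Promise<unknown>;
  content(): Promise<string>;
  close(): Promise<void>;
}
interface BrowserLike {
  newPage(): Promise<PageLike>;
}

// Placeholder for the real site's address (an assumption, not the client's URL).
const ORIGIN = "https://app.example.com";

// Open the SPA at the requested path, let it render, and return the final HTML.
async function renderForBot(browser: BrowserLike, path: string): Promise<string> {
  const page = await browser.newPage();
  try {
    // "networkidle0" waits until no network requests are in flight, by which
    // point React Helmet should have written the meta tags into <head>.
    await page.goto(`${ORIGIN}${path}`, { waitUntil: "networkidle0" });
    return await page.content();
  } finally {
    // Always release the tab, even if navigation throws.
    await page.close();
  }
}
```

In an Express app this would sit behind a catch-all handler, roughly `app.use(async (req, res) => res.send(await renderForBot(browser, req.originalUrl)))`, with `browser` coming from `puppeteer.launch()` and some error handling around it.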

For someone who’s interfaced with web content in many automated ways (Cheerio and Beautiful Soup, to name a few), it feels absolutely ridiculous to open a browser, even headlessly, to respond to a single request. But it turns out we could keep response times under 2 seconds without cache and a fraction of that cached, both well within the expectations of a social bot, and at no loss of user experience since normal users would never see this page.
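This post doesn't say where the caching lives, and a CDN layer could do the job just as well. As one possible shape, here is a small in-memory wrapper that reuses rendered HTML for a fixed window. `withCache`, `Renderer`, and the TTL parameter are all assumptions for the sake of the example.

```typescript
type Renderer = (path: string) => Promise<string>;

// Wrap a renderer so repeat requests for the same path within ttlMs reuse the HTML.
// `now` is injectable so the expiry logic can be exercised without waiting.
function withCache(
  render: Renderer,
  ttlMs: number,
  now: () => number = Date.now
): Renderer {
  const entries = new Map<string, { html: string; expiresAt: number }>();
  return async (path) => {
    const hit = entries.get(path);
    if (hit && hit.expiresAt > now()) return hit.html; // fresh entry: skip the browser
    const html = await render(path);
    entries.set(path, { html, expiresAt: now() + ttlMs });
    return html;
  };
}
```

The map here never evicts anything, so a long-running service with many distinct listing URLs would want a size cap as well.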

Much credit to our client, Austin, for originally suggesting the load balancer angle.

Tools we’re watching

- DevEnv for consistent developer environments, kind of like a venv for operating system dependencies!

- Diagrams as Code for composing instructive cloud architecture diagrams for our clients