Our Transition Away from Firebase: The Reasons Behind Our Move

04/30/2023 Update: This post originally explained our rationale for diversifying our infrastructure beyond Firebase. At popular request, we’ve created a follow-up post with the do’s and don’ts of building a business on the Firebase platform.

A special thanks to my friend and colleague Austin Kline for proofreading and collaborating on many workarounds mentioned in this article.

We’ve launched dozens of applications on Firebase, utilized nearly every facet of the platform, and designed a playbook for scaling gracefully. Suffice it to say, it’s proven an invaluable tool for K-Optional Software. As recently as March 2022, our developers were cheering innovations like Firebase Extensions. Unfortunately, three major developments in the past few months have polluted the developer experience, and consequently K-Optional will shift towards alternatives for greenfield projects.

Firebase: the good

The Google-owned platform-as-a-service (PaaS) enables builders to punt on several infrastructural decisions: content-delivery networking, NoSQL database event handlers, and network topology to name a few. True, a bespoke bundle of native services built on AWS / Azure / GCP bests the Firebase suite in pure performance. But when we consider developer hours and maintenance costs, Firebase is often a logical play.

The original Firebase Realtime Database felt fairly revolutionary, especially before the mass acceptance of WebSockets or the emergence of Server-Sent Events. You could write applications in sync with real-time data without heaps of transmission logic. Those who have home-rolled messaging applications with long-polling requests sure appreciated it.

In fact, there are many aspects of Firebase we love:

- With Firestore, many client state-management challenges disappear, especially pertaining to data freshness.

- You get real-time experiences for free.

- Firestore’s document / collection architecture: it forces one to be deliberate with data-modeling. It also mirrors an intuitive navigation scheme.

- Ditto for relational data in Firestore. Unlike in MongoDB, it is impossible to do anything remotely similar to a SQL join. Therefore, developers must embrace the ethos of NoSQL by distributing relational data ahead of time.

- The Firebase suite is conducive to fast prototyping that can scale. Handle data connections from the client, harden security rules before releasing to production, and use Firebase Functions for sensitive logic.

- Cloud Firestore Security Rules are enjoyable to write and a solid model for how to think about client-server security.

- Authentication out of the box is nice. (Built-in Firebase email-verification is, in our opinion, a poor experience though).

- We’ve actually found Firebase Hosting more straightforward when it comes to CI/CD than AWS S3 + CloudFront because there’s a simple command for setting this up for a repository.

Firebase: the not so good

On the flip side, there are also quite a few pieces of Firebase that have given me pause:

- Firebase mandates Google / GSuite sign-in; we like to distribute our vendors and services.

- Firebase Hosting doesn’t expose granular file control; you can either deploy an entire application or nothing at all. Perhaps niche, but we’ve run into limitations with static page generation and debugging CDN issues.

- Firestore index creation is slow and ungraceful, taking way longer than an equivalent Algolia index.

- Being closed-source, you don’t have the implicit assurance that Firebase will always be around (like Parse), nor can you reliably depend on a specific API version.

- You also therefore can’t truly run Firebase locally. Sure, there are Firebase Emulators, but these are slow, tough to debug, and generally lacking; random things often fail under sufficient load which you might expect a robust local environment to withstand.

- The Firebase CLI is pretty gated:

  - You can’t do simple things like enable Firestore, other than from the dashboard.

  - `firebase login:ci` deliberately inhibits piping an auth key. I’d love to do `firebase login:ci | xargs -I {} gh secret set FIREBASE_TOKEN --body="{}"`, but alas, we get extra lines before and after. (See below for an ugly workaround we used.)

Aside: Speaking of the gated Firebase CLI, here are two of our oft-used workarounds which you may find useful.

Extracting a machine-readable CI token

Yes, I’d like to pipe my CI token directly into my secret manager.

citokenRaw=$(firebase login:ci)
citoken=$(echo "$citokenRaw" | tail -n 3 | head -n 1)

Web configuration into .env

This little number downloads a Firebase web snippet and transforms it into something fit for an .env file. The web snippet configures your site to use a particular Firebase Application, and using environment variables allows us to preserve scaffolding across projects.

# ugly ugly ugly
fbKeysObject=$(\
firebase apps:list --project=$FB_PROJECT --non-interactive --json \
| fx '.result[0].appId' | xargs -I {} firebase apps:sdkconfig WEB {} \
| sed '/{/,/}/!d ' \
| sed -r 's/;|firebase\.initializeApp|\(|\)//g' \
)

# Build a .env file
echo "$fbKeysObject" | jq '.projectId' | xargs -I {} echo "REACT_APP_FB_PROJECT_ID="\"{}\" > .env
echo "$fbKeysObject" | jq '.appId' | xargs -I {} echo "REACT_APP_FB_APP_ID="\"{}\" >> .env
echo "$fbKeysObject" | jq '.storageBucket' | xargs -I {} echo "REACT_APP_FB_STORAGE_BUCKET="\"{}\" >> .env
echo "$fbKeysObject" | jq '.locationId' | xargs -I {} echo "REACT_APP_FB_LOCATION_ID="\"{}\" >> .env
echo "$fbKeysObject" | jq '.apiKey' | xargs -I {} echo "REACT_APP_FB_API_KEY="\"{}\" >> .env
echo "$fbKeysObject" | jq '.authDomain' | xargs -I {} echo "REACT_APP_FB_AUTH_DOMAIN="\"{}\" >> .env
echo "$fbKeysObject" | jq '.messagingSenderId' | xargs -I {} echo "REACT_APP_FB_MESSAGE_SENDER_ID="\"{}\" >> .env

End aside.

To summarize, most existing problems with Firebase stem from Google’s ownership and are mostly annoyances to me. It’s the recent developments that have been cause for reconsideration…

The writing on the wall

Three recent developments with Firebase have convinced us that the future is with tools like Supabase.

Forced migration to GCP via removal of Firebase features

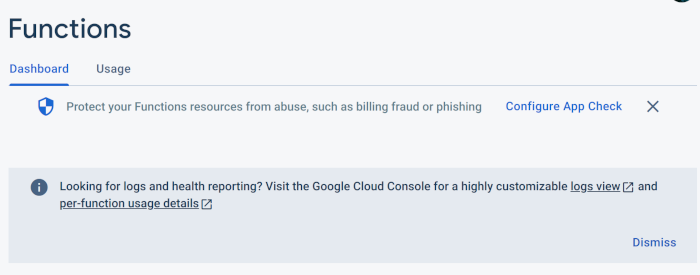

In the past few months, Firebase dropped Cloud Function logs from the dashboard. If you want these you can follow links to a Google Cloud Console dashboard.

Well if it’s highly customizable, I suppose it’s a favor to me

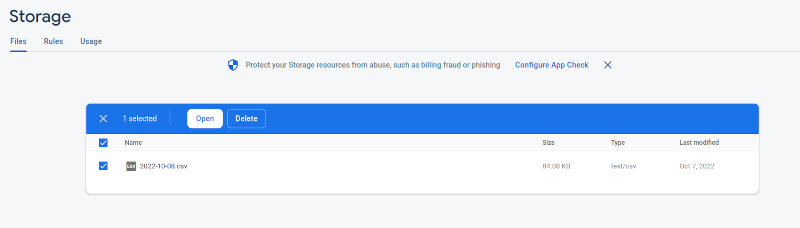

I also noticed that on the Firebase Storage dashboard, you can’t download files; you must navigate over to the separate GCP platform.

On the Firebase Dashboard, I can’t download this file. Counterintuitively, “Open” does not allow one to download.

Downloading is straightforward on Google Cloud Console.

It seems that GCP is cannibalizing the Firebase developer environment. From an ops perspective, that makes sense. But axing the simplified cloud experience of Firebase removes much of its value; our clients don’t want to make sense of GCP. On recent Firebase projects I wondered if we would be better off launching bespoke services instead. I’m sure Google wouldn’t mind developers abandoning Firebase for pure GCP.

Recent Cloud Function deployment rate limits

Cloud Function CI/CD has degraded. Firebase enforces a quota for Cloud Function deployment of 80 writes per 100 seconds. As far as I can tell, the quota has existed for a while.

But recently, Cloud Function deployments started failing silently upon hitting this quota. That’s tricky because 80 endpoints aren’t all that many, and Firebase has not exposed a clean way to deploy only Cloud Functions that changed.

K-Optional Software received multiple consultation requests for this issue on projects we don’t own at roughly the same time, pointing to a sudden and inconvenient API change.

I’ve considered two workarounds:

- Use a single Cloud Function which invokes conditional logic (say, with an event dispatcher) based upon an event name. That might look like a single function called `dispatcherFunction` which switches on `eventName`, calling internal functions accordingly.

- Instill a convention where every Cloud Function corresponds to its own file. In the CI code, filter out files that have not changed and deploy functions corresponding to the files that have.

Needless to say, both of these workarounds leave a lot to be desired. Cramming routing logic into an endpoint sacrifices readability and HTTP-level caching, and the scaffolding approach doesn’t help large existing projects.
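For what it’s worth, the second workaround might be sketched roughly like this. It is not something we run in production as written, and it leans on assumptions: the functions/src/<name>.ts layout, the one-export-per-file naming, and the BASE_SHA variable are all hypothetical and would need to match your own project and CI. The only Firebase-specific piece is the CLI’s ability to deploy named functions with --only functions:<name>.

```shell
#!/usr/bin/env bash
# Sketch only. Assumption (hypothetical layout): every Cloud Function lives in
# functions/src/<name>.ts and is exported under that same <name>.

# Reads changed file paths on stdin (one per line) and prints a comma-separated
# target list such as "functions:sendEmail,functions:onSignup".
changed_function_targets() {
  local src_dir="${1:-functions/src}"
  local targets="" path rel
  while IFS= read -r path || [ -n "$path" ]; do
    rel="${path#"$src_dir"/}"
    [ "$rel" = "$path" ] && continue      # not under the functions directory
    case "$rel" in
      */*) continue ;;                    # nested helper, not a function file
      *.ts) targets="${targets:+$targets,}functions:${rel%.ts}" ;;
    esac
  done
  printf '%s\n' "$targets"
}

# Deploys only the functions whose files differ between BASE_SHA and HEAD.
# BASE_SHA is a hypothetical variable your CI would need to provide.
deploy_changed_functions() {
  local targets
  targets="$(git diff --name-only --diff-filter=d "$BASE_SHA" HEAD | changed_function_targets)"
  if [ -z "$targets" ]; then
    echo "No function files changed; skipping deploy."
    return 0
  fi
  firebase deploy --only "$targets"
}
```

Two caveats with this sketch: a change to a shared helper module won’t trigger a redeploy of the functions that import it, and deleted function files are skipped (that is what --diff-filter=d does), so removals still need handling by hand.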

GCP Favoritism Part 2

Finally, Firebase increasingly shepherds users over to GCP for essential services. Intermittently over the past few months, developers reported failures on Firebase Hosting due to missing permissions. Our team began reporting this issue last week. Why Firebase Hosting requires Cloud Function list authorization confounds me. In any event, Google Cloud Console provides the sole means for adding this permission.

I’ve caught myself on this permissions dashboard a lot recently in spite of a downtick in Firebase development.

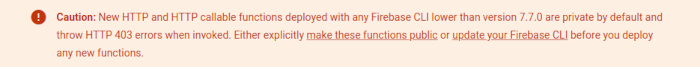

From the Cloud Function Deployment docs: A Firebase error solved on Google Cloud only.

Supabase

We’ve developed a few small projects on Supabase recently as a part of our prospecting process. The developer experience has been delightful, particularly Row Level Security, the more powerful analog to Firestore Rules. That Supabase is betting on Deno for their serverless function suite indicates to us that they are serious about great technology.

We love PostgreSQL, which Supabase utilizes. We plan to do more research on scalability, since column-based * SQL databases can’t grow as big as their NoSQL counterparts. Nonetheless, Supabase came at the right time.

* Edit: poor choice of words